Quick Deployment of LLaMA-Factory

Overview

LLaMA-Factory is an open-source full-stack large model fine-tuning framework covering the pre-training, instruction fine-tuning, and RLHF (Reinforcement Learning from Human Feedback) stages. It has the advantages of high efficiency, ease of use, and scalability, and is equipped with LLaMA Board, a zero-code visual one-stop web fine-tuning interface. It also includes various training methods such as pre-training, supervised fine-tuning, and RLHF, supports 0-1 reproduction of the ChatGPT training process, and has rich Chinese and English parameter prompts, real-time status monitoring, concise model breakpoint management, and supports web reconnection and refresh.

Quick Deployment

Log in to the UCloud Global console (https://console.ucloud-global.com/uhost/uhost/create ). Select “GPU Type” and “High-Cost Performance Graphics Card 6” for the instance type, and customize details such as the number of CPUs and GPUs as needed.

Minimum recommended configuration: 16-core CPU, 64G RAM, 1 GPU.

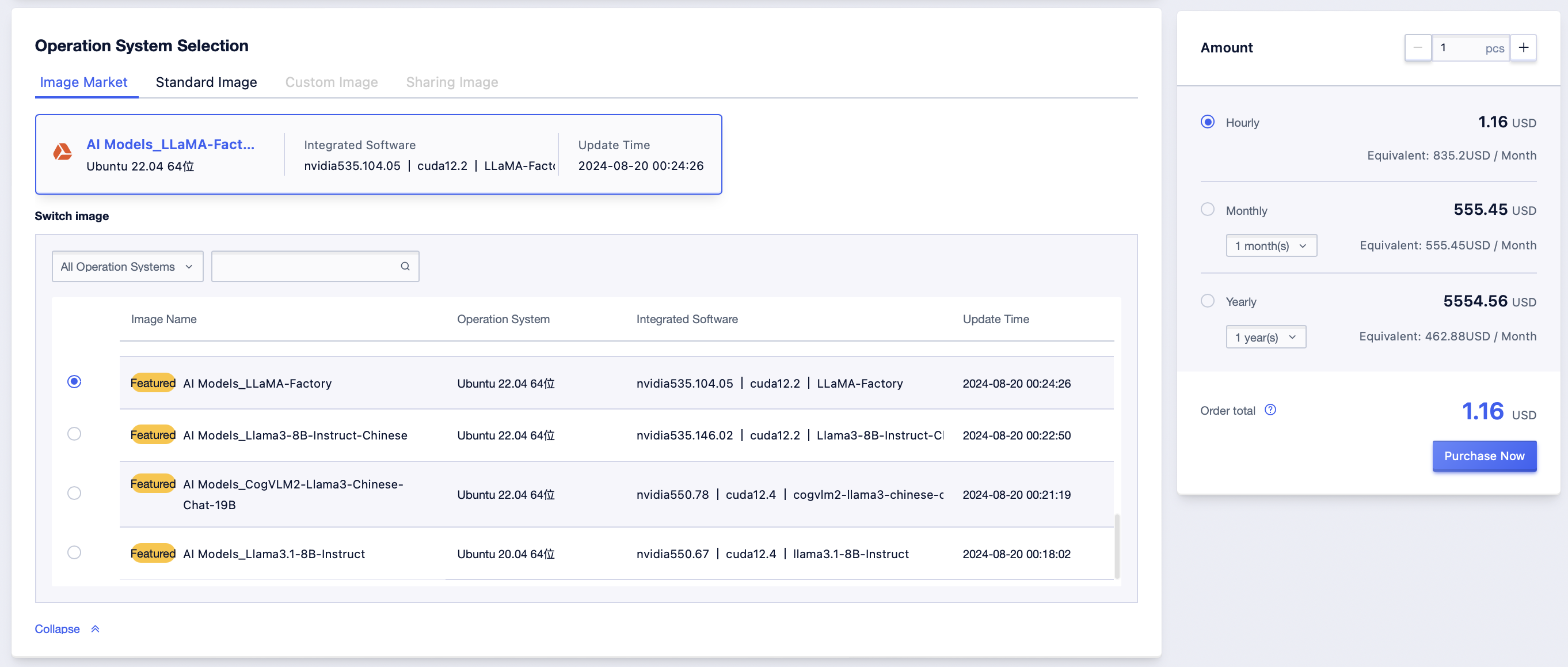

Select “Image Market” for the image, search for “LLaMA-Factory” for the image name, and select this image to create a GPU UHost.

After the successful creation of the GPU UHost, log into the GPU UHost.

Operation Practice

Visit http://ip:7860 through your browser. Please replace the IP with the external IP of the UHost, and check the security rules in the console if you cannot access it.

1. Loading Device

Note: The models in 1 and 2 must correspond one by one. The path in 2 is the path of the model downloaded on the local virtual machine.

2. Operation

3. Training Parameters

After training with the web UI, you can click Refresh Adapters to reload the local LoRA weights and specify the trained LoRA weights via the adapter path.

Once the weights are selected, the training will resume from the trained LoRA weights.

After selecting the weights, conversations will be conducted with the trained LoRA model.

Note: Due to the web UI’s path rules, it can only recognize LoRA weights trained by the web UI and cannot identify weights trained via the command line.

The Quantization bit needs to be set to 4, otherwise, the 24G video memory of the current recommended model will be tight.

Prompt template is bound to the corresponding model. As it will be brought out automatically after choosing the model name above, it does not need to be adjusted.

Choose the training dataset in the position in the picture, and click Preview dataset to preview the training data.

If you need to add a custom dataset, please refer to /home/ubuntu/LLaMA-Factory/data/README.md

Learning rate is between 1e-5 and 5e-4 (without changing the optimizer). It’s somewhat mysterious, you can try several learning rates when brushing up the score.

Epochs can be adjusted according to the loss image. For datasets smaller than 10,000 samples, start with epochs=3 and increase if the curve doesn’t flatten at the end. For datasets over 60,000 texts (avg. ≥20 characters per text), set epochs between 1-3.

The recommended value for Maximum gradient norm is between 1-10.

Max samples are to limit the use of the first few entries of the dataset. Use full dataset for training; set to 10 during testing to reduce validation time.

Cutoff Length determines the maximum length for truncating training sentences. Longer sentences require more GPU memory; if memory is insufficient, consider reducing this value to 512 or even 256. This parameter should be set according to the sequence length requirements of your fine-tuning task. Note that after fine-tuning, the model’s performance may degrade on sentences longer than the specified cutoff length.

Batch Size affects training quality, speed, and GPU memory usage. For optimal training quality, the product of Batch Size * Gradient Accumulation Steps should generally fall between 16 and 64, which represents the effective batch size. Larger batch sizes (e.g., up to 4090 for modern GPUs) can accelerate training but increase memory consumption. As a rule of thumb, a batch size of 4 is often the upper limit for memory-constrained setups; reduce this value if encountering out-of-memory errors.

For extremely long sequences (e.g., 2048 tokens), use Batch Size=1 with Gradient Accumulation=16. Gradient accumulation does not impact memory usage directly but increases the effective batch size, thus influencing training stability and convergence.

Save steps determines how often the model checkpoint is saved during training, enabling recovery from unexpected interruptions. The total number of training steps is calculated as epochs × total_samples ÷ batch_size ÷ gradient_accumulation, which is also displayed in the training progress bar. Each checkpoint saves the full model weights, typically occupying dozens of gigabytes (GB) of storage.

Recommendation: Set Save Steps to 1000+ to avoid rapid disk space depletion. After training completes, checkpoints can be deleted from the directory: /home/ubuntu/LLaMA-Factory/saves/ChineseLLaMA2-13B-Chat/lora/…

The Preview command generates an equivalent command for running in the terminal. The Output dir specifies where training results and checkpoints will be saved. After configuring all parameters, click Start to initiate training directly. Alternatively, copy the generated command and execute it in the llama_factory Conda environment under the /home/ubuntu/LLaMA-Factory directory.